Social media engagement is the lifeblood of brands, influencers, and enterprises. But what happens when those heartwarming “likes” on your posts are coming from bots? Bot-like interactions, generated by automated scripts, can inflate metrics, skew algorithms, and erode trust. Traditional anti-bot tools? They’re falling short. In 2025, social platforms and digital businesses face a surge in fake engagement driven by AI-assisted automation, click farms, and low-cost bot networks. These bots no longer resemble simple scripts. Many now emulate human rhythms, device patterns, and realistic interaction flows.

This is where modern tools such as an extension that detects bot likes become increasingly important. They provide a level of client-side visibility that network-level or server-side detection cannot reliably deliver. But while extensions help diagnose engagement fraud, they cannot prevent it alone. To truly eliminate automated abuse, enterprises also require behavior-based verification and full bot management across all sensitive endpoints.

This article explains why traditional bot detection fails, how an extension that detects bot likes works under the hood, and what enterprises must do to defend against modern fake-engagement attacks.

Why Are Bot Likes Booming in 2025?

The year 2025 has seen bot likes evolve from crude spam into sophisticated psy-ops, fueled by generative AI models like those from OpenAI and emerging alternatives. According to Imperva’s 2025 Bad Bot Report, bad bots (malicious automated scripts) now account for 37% of global internet traffic, up 5 percentage points from 2024, with social platforms bearing the brunt. On sites like X (formerly Twitter), Facebook, and Weibo, AI-driven bots have quadrupled in activity since January, flooding feeds with fake positivity.

Key drivers include:

AI Accessibility: Tools like GPT-5 derivatives allow anyone to spin up bot farms for pennies, generating human-like profiles complete with stolen photos and contextual replies.

Economic Incentives: In a creator economy valued at $250 billion, fake engagement promises quick virality. Brands and politicians pay for “boosts” that can turn a post from obscurity to millions of impressions overnight.

Platform Algorithms: Systems on X and Meta prioritize high-engagement content, creating a feedback loop where bot likes propel low-quality posts to the top.

| Metric | 2024 Baseline | 2025 Value (Change) | Source |
| --- | --- | --- | --- |
| Bot Traffic Share | 32% | 37% (+5 pp) | Imperva Bad Bot Report |
| AI Bot Requests | 2.6% of bots | 10.1% of bots (+287%) | Equimedia AI Surge Report |
| Fake Likes per Bot Swarm | N/A | 45,000 (from 1,300 accounts) | Antibot4Navalny Analysis |
| Engagement Boost from Bots | N/A | +11% likes, +23% comments | Weibo Study |

Real-World Echoes on X

X, with its real-time pulse, offers raw glimpses into the bot wars. In late November 2025, users exposed foreign agitprop rings when temporary glitches revealed where bot accounts originated: massive farms buying likes to simulate grassroots support. Crypto promoters railed against “fake influence,” with one thread arguing that “every social media influencer should be paid based on verifiable on-chain actions instead of fake likes.”

Accusations of fake engagement circulate widely. Podcaster Kyle Kulinski has been accused of relying on a click farm to inflate his posts and mask declining organic reach. K-pop fans criticize HYBE for allegedly using bot likes on Twitter to distort chart performance. High-profile political conflicts, including those involving Nick Fuentes, have drawn renewed scrutiny after abrupt surges in engagement. A spike of 2,000 likes within four minutes is almost impossible to attribute to authentic user behavior and strongly suggests automation at work.

The Impact on Businesses and Platforms: Beyond Vanity Metrics

Misleading Engagement Data: Inflated KPIs make it impossible to accurately gauge campaign performance, leading to misguided marketing investments.

Significant Ad Spend Waste: Bots clicking on ads drive up costs without any potential for conversion, directly harming marketing ROI.

Erosion of Platform Trust: When users perceive that engagement is inauthentic, their trust in the platform and the brands advertising on it diminishes.

How Bot Likes Work: Modern Automated Engagement in 2025

Bot likes operate through a multi-step pipeline that prioritizes stealth and scale. Here’s how they typically unfold:

- Account Creation and Farm Building: Operators start by generating or acquiring “zombie” accounts en masse. These are low-profile accounts seeded with basic human-like activity, such as sporadic posts or follows, to build credibility. In 2025, automated profile generators use large language models (LLMs) to write bios and initial content, and image generators to produce profile pictures (often AI-synthesized faces), so that new accounts pass platform scrutiny. Farms can scale to thousands of accounts via cloud services, with each one “aged” over weeks to avoid new-account flags.

- Targeting and Intelligence Gathering: Bots don’t like blindly. Advanced systems scrape public data via APIs or web crawlers to identify high-value targets, such as trending posts, niche hashtags, or competitor content. Semantic analysis, powered by models like GPT variants, ensures likes align with “relevant” topics, like environmental activism or K-pop trends. For instance, a bot might prioritize posts with under 100 likes to simulate organic early buzz.

- Execution and Distribution: The like action itself is a lightweight API call or simulated browser interaction. Bots distribute actions across accounts using residential proxies (real user IPs) and randomized timing to evade rate limits. A single campaign might involve 1,000 likes doled out over hours, with variations in session length (e.g., 5-15 minutes) and “human pauses” between clicks. On TikTok, for example, bots auto-like videos on the For You Page, triggering algorithmic pushes to more users.

- Amplification Loops: After liking, bots often chain actions, such as following the poster or adding generic comments (e.g., “Love this vibe!”), to compound the effect. Generative AI crafts personalized replies; a recent Weibo study measured the bot-driven boost at roughly 11% more likes and 23% more comments. This creates a feedback loop where real users pile on, mistaking bot momentum for genuine hype.

The entire process runs on orchestration tooling, from custom Python scripts to no-code automation tools (e.g., Zapier workflows), often hosted on a VPS for 24/7 operation.

Why Do Traditional Bot Detection Tools Fail to Detect Bot Likes?

1. IP-Based Filtering Is Obsolete

Legacy tools rely on rate limiters and IP blacklists, monitoring traffic volume from single addresses or datacenter ranges. Bot operators now route traffic through rotating residential proxies, which are real IPs drawn from peer-to-peer networks of everyday users, and through large mobile IP pools from carriers. Such setups blend into normal traffic: no single IP hits the limits, and reputation scoring, like that from MaxMind, loses its edge. A bot campaign might spread across 10,000 unique residential IPs, each one looking like a single human liker in a different area.

Security teams see this often in login logs: proxy rotation dodges blocks and leads to hidden account takeovers or engagement scams. Mobile proxies add another layer. Carrier-grade NAT places many devices behind one public IP, so blocking that IP also blocks legitimate users, which makes detection even harder. IP filtering has become a futile chase, because bots switch to new pools faster than defenses can adapt.
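To make the rotating-proxy problem concrete, here is a minimal Python sketch, assuming like events arrive as `(ip, post_id)` pairs and a hypothetical legacy rule that blocks any IP with more than 5 likes. A campaign spread across 1,000 distinct IPs never trips the per-IP rule, even though the per-post total is plainly abnormal.

```python
from collections import Counter

# Hypothetical legacy rule: more than 5 likes per IP in the window triggers a block.
PER_IP_LIMIT = 5

def per_ip_flags(events, limit=PER_IP_LIMIT):
    """Return the IPs whose like count exceeds the per-IP limit."""
    counts = Counter(ip for ip, _post in events)
    return {ip for ip, n in counts.items() if n > limit}

def per_post_totals(events):
    """Count likes per post, ignoring which IP they came from."""
    return Counter(post for _ip, post in events)

# Simulated campaign: 1,000 likes on one post, each from a distinct (made-up) IP,
# as a rotating residential proxy pool would produce.
campaign = [(f"10.0.{i // 256}.{i % 256}", "post-42") for i in range(1000)]

print(len(per_ip_flags(campaign)))           # 0: no single IP crosses the limit
print(per_post_totals(campaign)["post-42"])  # 1000: the anomaly only shows in aggregate
```

The design point is that the signal moves from the source address to the target: counting per post (or per account cluster) sees what per-IP counting cannot.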

2. Header and User-Agent Checks Are Trivially Spoofed

Early systems parse HTTP headers for mismatches, such as a desktop browser pretending to be mobile or a request missing the cookies a real session would carry. Modern bot frameworks change this game. Automation tools like Puppeteer or Selenium, extended with stealth plugins, generate lifelike headers drawn from huge databases of real browser signatures, covering details from Chrome’s WebGL rendering to iOS Safari traits. Attackers can even pose as AI crawlers like ChatGPT’s for extra cover. Basic fingerprinting fails without deeper checks.

Deeper inspection is key here, such as measuring header entropy or checking that the TLS fingerprint matches the browser the headers claim to be. Without it, false negatives rise. For bot likes, a scripted click mimics a quick scroll and tap and evades any tool that skips that deeper probe. Experts call user-agent trust a weak link because off-the-shelf libraries game it with ease.
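For illustration, here is the kind of shallow header-consistency check legacy tools run, written as a minimal Python sketch. `Sec-CH-UA-Mobile` and `Sec-CH-UA-Platform` are real User-Agent Client Hints headers sent by Chromium-based browsers; the specific rules and function names are hypothetical. A stealth framework that copies a complete, coherent header set from a real browser passes every one of these checks, which is exactly why the deeper TLS-level comparison is needed.

```python
def ua_platform(ua):
    """Rough platform guess from a User-Agent string (illustrative, not exhaustive)."""
    if "Android" in ua:
        return "Android"
    if "iPhone" in ua or "iPad" in ua:
        return "iOS"
    if "Windows NT" in ua:
        return "Windows"
    if "Macintosh" in ua:
        return "macOS"
    return None

def header_mismatches(headers):
    """Legacy-style consistency checks between User-Agent, Client Hints and cookies."""
    h = {k.lower(): v for k, v in headers.items()}
    ua = h.get("user-agent", "")
    reasons = []

    # Sec-CH-UA-Mobile is "?1" for mobile and "?0" otherwise.
    ch_mobile = h.get("sec-ch-ua-mobile")
    if ch_mobile is not None and (ch_mobile == "?1") != ("Mobile" in ua):
        reasons.append("User-Agent and Sec-CH-UA-Mobile disagree")

    # Sec-CH-UA-Platform carries a quoted string such as "Windows".
    ch_platform = h.get("sec-ch-ua-platform", "").strip('"')
    claimed = ua_platform(ua)
    if ch_platform and claimed and ch_platform != claimed:
        reasons.append(f"platform mismatch: UA says {claimed}, hint says {ch_platform}")

    # A like is an authenticated action, so a real session should carry cookies.
    if "cookie" not in h:
        reasons.append("no cookies on an authenticated action")
    return reasons
```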

3. Legacy Device Fingerprinting Is Blind to Emulator Clusters

Device fingerprinting builds unique IDs from static traits such as screen resolution, installed fonts, and timezone. Bot farms fight back with emulators and virtual machines on cloud setups. Android Studio emulators and anti-detect browsers such as Multilogin produce “clean” virtual devices and randomize their settings on the fly, so to scanners they look like varied, everyday hardware. One farm could launch 5,000 emulated phones, each liking content with small tweaks to avoid pattern detection.

Because these profiles mimic fresh-out-of-box devices, legacy systems are fooled. Catching them requires advanced checks, such as sensor-data validation or GPU anomaly scans. In social media’s fraud landscape, this gap lets emulator-driven likes swamp replies and trends, and tools cannot tell a scripted swarm from a genuine viral spike.
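The “sensor-data validation” and “GPU anomaly” checks can be sketched as simple consistency rules. This Python sketch assumes a hypothetical `DeviceReport` collected client-side. SwiftShader (Google’s software renderer) and llvmpipe (Mesa’s) are real software-rendering names that can surface through WebGL’s renderer string, but the list here is illustrative, not complete. Note that iOS gates motion events behind a permission prompt, so missing motion data is a weak signal on its own.

```python
from dataclasses import dataclass

# Illustrative substrings of software (non-GPU) renderers common in VMs and emulators.
SOFTWARE_RENDERERS = ("swiftshader", "llvmpipe", "android emulator")

@dataclass
class DeviceReport:
    claims_mobile: bool      # what the fingerprint says the device is
    max_touch_points: int    # e.g. navigator.maxTouchPoints
    motion_events_seen: int  # devicemotion events observed during the session
    gpu_renderer: str        # WebGL renderer string

def emulator_signals(d):
    """Return human-readable reasons this device looks emulated."""
    signals = []
    if d.claims_mobile and d.max_touch_points == 0:
        signals.append("mobile claim without touch support")
    if d.claims_mobile and d.motion_events_seen == 0:
        signals.append("mobile claim with no motion-sensor data")
    renderer = d.gpu_renderer.lower()
    if any(name in renderer for name in SOFTWARE_RENDERERS):
        signals.append("software GPU renderer")
    return signals
```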

4. AI-Driven Automation Mimics Human Behavior

AI delivers the final challenge to old tools. Bots train on massive volumes of user session data and copy not just clicks but fine details: uneven typing speeds, mouse curves, and time spent on feeds. Headless browsers driven by machine learning create “human-like” liking paths, pausing during scrolls and varying session length, which beats simple rule-based checks. Automated traffic now makes up over half of all web traffic, and bots excel at quiet actions like likes, where patterns stay low-key.

Key Behavioral Signals an Extension That Detects Bot Likes Can Reveal

A strong extension that detects bot likes identifies patterns that are nearly impossible for basic scripts or headless browsers to emulate consistently. Some of the high-confidence signals include:

- Interaction Trajectory Signatures

Human cursor movement contains acceleration–deceleration curves, micro-corrections, and natural error patterns. Bots often produce straight-line paths or machine-smooth motions.
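One way to quantify trajectory signatures is with two features: straightness (straight-line distance divided by path length, where 1.0 is a perfect line) and the coefficient of variation of pointer speed. A minimal Python sketch, assuming pointer samples arrive as hypothetical `(t_ms, x, y)` tuples and using made-up thresholds; real detectors combine many more features, usually in a trained model.

```python
import math
from statistics import mean, pstdev

def trajectory_features(points):
    """Return (straightness, speed_cv) for pointer samples given as (t_ms, x, y)."""
    if len(points) < 3:
        raise ValueError("need at least three pointer samples")
    path_len = 0.0
    speeds = []
    for (t0, x0, y0), (t1, x1, y1) in zip(points, points[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        path_len += step
        if t1 > t0:
            speeds.append(step / (t1 - t0))
    _, xs, ys = points[0]
    _, xe, ye = points[-1]
    direct = math.hypot(xe - xs, ye - ys)
    # 1.0 means a perfectly straight path; human paths curve and self-correct.
    straightness = direct / path_len if path_len > 0 else 1.0
    avg = mean(speeds) if speeds else 0.0
    # Low speed variation suggests machine-smooth, constant-velocity motion.
    speed_cv = pstdev(speeds) / avg if avg > 0 else 0.0
    return straightness, speed_cv

def looks_scripted(points, straight_min=0.98, cv_max=0.1):
    """Hypothetical rule: near-perfect line AND near-constant speed."""
    straightness, speed_cv = trajectory_features(points)
    return straightness >= straight_min and speed_cv <= cv_max
```

A scripted path such as `[(i * 10, i * 5, i * 5) for i in range(20)]` scores a straightness of 1.0 with zero speed variation, while a curved, accelerating human path falls well below the straightness threshold.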

- Click Burst Patterns

Bot farms generate likes in tightly clustered bursts, often synchronized down to the millisecond, because multiple virtual instances follow the same script.
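Burst detection reduces to counting likes inside a sliding time window. A minimal Python sketch, assuming like timestamps in milliseconds for a single post; the 50 ms window and 20-like threshold in `is_burst` are hypothetical values chosen for illustration.

```python
from collections import deque

def max_likes_in_window(timestamps_ms, window_ms):
    """Largest number of likes inside any half-open window of length window_ms."""
    best = 0
    window = deque()
    for t in sorted(timestamps_ms):
        window.append(t)
        # Drop likes that fell out of the window ending at t.
        while t - window[0] >= window_ms:
            window.popleft()
        best = max(best, len(window))
    return best

def is_burst(timestamps_ms, window_ms=50, threshold=20):
    """Hypothetical rule: 20+ likes within 50 ms on one post is treated as scripted."""
    return max_likes_in_window(timestamps_ms, window_ms) >= threshold
```

The same function with `window_ms=240_000` measures the “2,000 likes within four minutes” pattern, which works out to roughly 8 likes per second sustained.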

- Interaction-to-Content Context

Humans read before they like. Bots don’t.

Extensions observe:

- Time on element before interaction

- Scroll progression

- Whether the element was visible in viewport

Likes with no content exposure are a red flag.
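The three observations above can be combined into a simple per-like check. In a real extension, visibility would typically come from a browser API such as IntersectionObserver; here, as a minimal Python sketch, the fields of the hypothetical `LikeContext` record are plain inputs and the 800 ms dwell threshold is an assumption. A single flag is weak evidence (a post at the top of a feed can be liked without scrolling); the value comes from aggregating flags across many likes.

```python
from dataclasses import dataclass

@dataclass
class LikeContext:
    dwell_ms: int       # time the post was on screen before the like
    was_visible: bool   # post intersected the viewport at click time
    scroll_events: int  # scroll events recorded before the like

def context_red_flags(ctx, min_dwell_ms=800):
    """Return reasons a like looks like it happened without reading the content."""
    flags = []
    if not ctx.was_visible:
        flags.append("liked while off-screen")
    if ctx.dwell_ms < min_dwell_ms:
        flags.append("too little time on content")
    if ctx.scroll_events == 0:
        flags.append("no scroll activity")
    return flags
```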

- Multi-Account, Single-Device Behavior

Bots frequently control tens or hundreds of accounts from the same underlying device configuration, even when IPs differ. Extensions can see environmental patterns that server-side systems can’t.
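Multi-account, single-device behavior can be surfaced by grouping accounts by device fingerprint. A minimal Python sketch, assuming observations arrive as hypothetical `(account_id, fingerprint, ip)` tuples; the `max_accounts=3` tolerance is an assumption meant to allow shared household devices.

```python
from collections import defaultdict

def shared_device_clusters(observations, max_accounts=3):
    """Group accounts by device fingerprint and report fingerprints used by
    more than max_accounts accounts, with how many distinct IPs they used."""
    accounts = defaultdict(set)
    ips = defaultdict(set)
    for account_id, fingerprint, ip in observations:
        accounts[fingerprint].add(account_id)
        ips[fingerprint].add(ip)
    return {
        fp: {"accounts": len(accounts[fp]), "ips": len(ips[fp])}
        for fp in accounts
        if len(accounts[fp]) > max_accounts
    }
```

A result like 50 accounts across 50 IPs on one fingerprint is the signature described above: the IPs differ, but the device does not.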

These signals make extensions extremely useful for diagnosing engagement authenticity, but detection alone is not prevention.

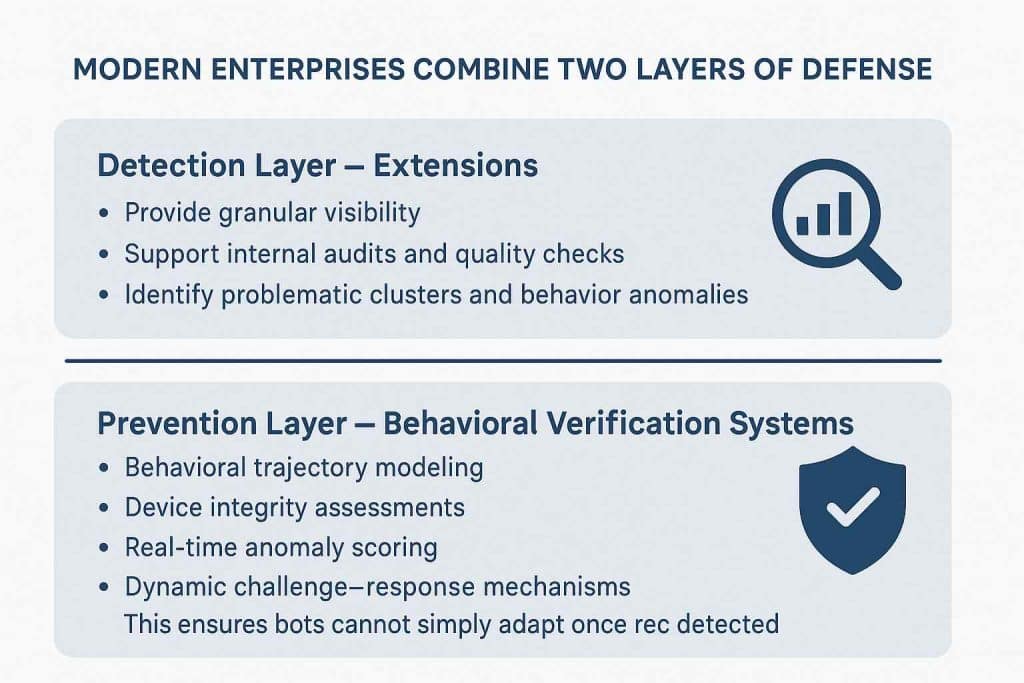

Extension That Detects Bot Likes vs. Platform-Level Behavioral Verification

Using an extension is like using an X-ray: it shows the problem clearly. But the treatment happens elsewhere.

Together, detection and prevention provide both transparency and protection.

Why Enterprises Need More Than an Extension: The Role of GeeTest in Modern Bot Defense

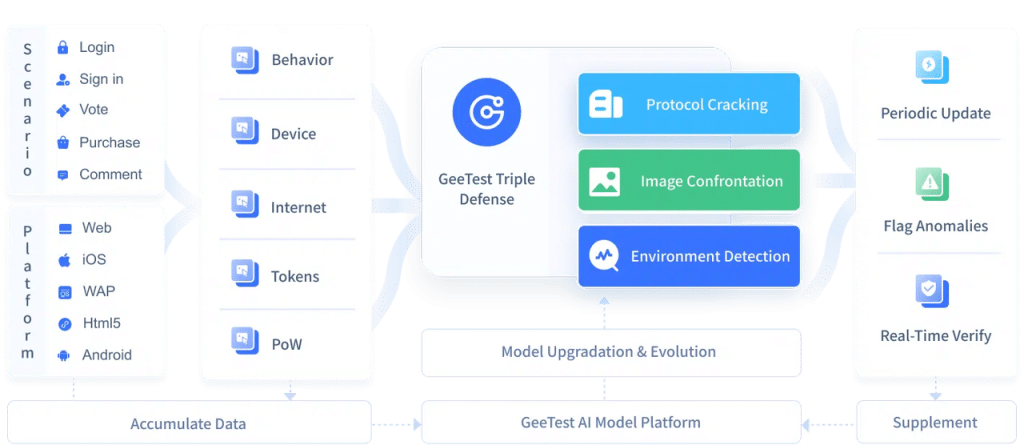

GeeTest’s fourth-generation Adaptive CAPTCHA and its three-part “Behavior, Device, and Identity” shields provide proactive, real-time bot management. The system evaluates multiple behavioral and environmental parameters and applies adaptive verification only when necessary, helping enterprises maintain strong protection with minimal user friction. Below is an overview of how GeeTest enhances bot defense across five key pillars.

1. Stopping Bot Accounts at Login and Registration

Disposable bot accounts are often used to execute fraudulent engagement. GeeTest blocks these early by identifying abnormal interaction patterns and device inconsistencies during login and registration.

Behavior-Based Screening: The system analyzes micro-interactions such as cursor paths, gesture sequences, and form submission behavior. Automated scripts tend to show repetitive or unnatural patterns that GeeTest’s behavioral models can detect.

Device Integrity Validation: GeeTest identifies inconsistencies in device configurations, browser environments, and system characteristics, which helps reveal virtual machines, emulators, or manipulated device setups used in bot farms.

Adaptive Risk Control: When unusual spikes or risky traffic sources appear, GeeTest automatically adjusts verification strength. Low-risk users pass seamlessly, while suspicious sessions receive additional checks.
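Risk-based verification of this kind can be sketched generically. The Python below is not GeeTest’s algorithm or API; it is a minimal illustration, assuming hypothetical signal names and weights, of how a risk score can map to pass, challenge, or block, with thresholds tightened during an unusual traffic spike.

```python
from enum import Enum

class Action(Enum):
    PASS = "pass"
    CHALLENGE = "challenge"
    BLOCK = "block"

# Hypothetical signal weights; a production system would learn these from data.
WEIGHTS = {
    "scripted_trajectory": 0.4,
    "emulator_traits": 0.3,
    "datacenter_ip": 0.2,
    "new_device": 0.1,
}

def decide(signals, traffic_spike=False):
    """Map observed risk signals to a verification action."""
    score = sum(WEIGHTS.get(s, 0.0) for s in signals)
    # During an unusual spike, lower both thresholds so verification tightens.
    challenge_at, block_at = (0.2, 0.6) if traffic_spike else (0.3, 0.8)
    if score >= block_at:
        return Action.BLOCK
    if score >= challenge_at:
        return Action.CHALLENGE
    return Action.PASS
```

With no signals the session passes invisibly; the same pair of signals that earns a challenge under normal traffic is blocked outright during a spike.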

2. Blocking Automation Tools Before They Trigger Engagement Actions

Even when attackers use real or compromised accounts, they still depend on automation tools. GeeTest monitors ongoing user actions and disrupts scripted workflows before they can scale.

Continuous Interaction Monitoring: By examining navigation paths, dwell times, and action sequences, GeeTest highlights repetitive or low-variance patterns commonly associated with automation.

Device and Environment Fingerprinting: GeeTest correlates stable device signals with environmental attributes to expose repeated or synthetic device profiles often used in automation environments.

Real-Time Response: Suspicious patterns can prompt immediate actions such as requiring additional verification or limiting interaction frequency.
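Limiting interaction frequency is commonly implemented with a token bucket. A minimal Python sketch, assuming a hypothetical per-account policy (a burst of 5 likes, refilling at one like every two seconds) that is not a GeeTest setting; the caller passes the current time, for example from `time.monotonic()`, which keeps the class easy to test.

```python
class TokenBucket:
    """Allow short bursts up to `capacity`, then throttle to `refill_per_sec`."""

    def __init__(self, capacity, refill_per_sec):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = float(capacity)
        self.last = 0.0

    def allow(self, now):
        """Consume one token if available; `now` is a monotonic time in seconds."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

Usage: `TokenBucket(capacity=5, refill_per_sec=0.5)` admits five instant likes, rejects the sixth, and admits one more after two seconds. Real users rarely notice such a limit, while a script firing hundreds of likes per minute is throttled immediately.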

3. Detecting Coordinated Manipulation Patterns at Scale

Platform manipulation often comes from coordinated groups of accounts acting in unison. GeeTest identifies such clusters through broader correlation rather than isolated checks.

Network-Level Correlation: Patterns such as shared access points, synchronized activity timing, or similar behavioral traits across many accounts help surface coordinated operations.

Abnormal Spike Detection: GeeTest compares real-time engagement trends against historical baselines, identifying unnatural growth curves or anomalies indicative of manipulation.
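Comparing live engagement against a historical baseline can be as simple as a z-score. This is a generic Python sketch, not GeeTest’s model; the baseline values are made up (likes per four-minute bucket), and the threshold of 4 standard deviations is an assumption. Real engagement is seasonal, so production systems typically use time-of-day-aware baselines rather than one flat history.

```python
from statistics import mean, pstdev

def spike_zscore(history, current):
    """How many standard deviations `current` sits above the historical mean."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Flat history: any increase is infinitely surprising, no change is not.
        return float("inf") if current > mu else 0.0
    return (current - mu) / sigma

def is_abnormal_spike(history, current, z_threshold=4.0):
    return spike_zscore(history, current) >= z_threshold
```

Against a hypothetical baseline of `[20, 25, 18, 22, 30, 24, 21, 19]` likes per four-minute bucket, a bucket of 28 likes is ordinary variation, while 2,000 likes lands hundreds of standard deviations out.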

4. Minimizing Intrusive Verification for Real Users

Strong security must not disrupt user experience. GeeTest applies a risk-based approach to ensure that most genuine users encounter little to no friction.

Adaptive Verification Flow: Verification intensity adjusts based on dynamic risk assessment. Low-risk sessions remain invisible, while high-risk sessions may receive interactive CAPTCHA challenges designed for quick completion.

Mobile-Optimized and Accessible Experience: GeeTest provides verification optimized for web, iOS, Android, and touch screens, with interfaces designed to maintain accessibility and reduce false positives.

5. Protecting Social Integrity and Platform Trust at Scale

Large social platforms require a defense strategy that can operate continuously and adapt to evolving bot tactics.

Enterprise-Ready Reliability: GeeTest is designed to support high-traffic environments and applies protective measures consistently across APIs and user-facing interfaces.

Proactive and Evolving Defense: The system updates its detection models to stay aligned with new automation techniques, device spoofing approaches, and behavior simulation tactics.

Actionable Operational Insights: GeeTest provides dashboards and reports that help teams monitor suspicious activities, identify trends, and strengthen platform integrity with data-driven decision making.

Conclusion

In 2025, genuine connections risk being drowned out by algorithmic illusions. Traditional bot detection tools, once stalwart gatekeepers, now crumble under the weight of AI-fueled sophistication, leaving platforms and enterprises vulnerable to inflated metrics, wasted ad dollars, and shattered user trust. Yet, as we’ve explored, hope lies in hybrid defenses: client-side extensions that unmask bot patterns through nuanced behavioral signals, paired with enterprise-grade solutions like GeeTest’s adaptive CAPTCHA and multi-layered verification.

These tools don’t merely detect. They proactively dismantle bot pipelines at every stage, from account creation to coordinated amplification, all while preserving seamless experiences for real users.

The creator economy thrives on authenticity, and so do the platforms that sustain it. Don’t let bots rewrite your narrative. Embrace advanced bot management today to fortify your digital presence, drive meaningful ROI, and rebuild trust one verified interaction at a time. Ready to outsmart the machines? Explore GeeTest’s solutions and turn the tide on engagement fraud.