AI fraud has rapidly become one of the most urgent cybersecurity challenges for businesses today. As AI-generated attacks grow more sophisticated—from deepfake executive impersonation to automated credential abuse—organizations must understand how AI scams work and how to build strong enterprise-grade defenses.

This article explains how AI fraud affects businesses, why companies are becoming primary targets, and how enterprises can proactively stop AI-powered attacks while protecting their customers, employees, and digital platforms.

What Is AI Fraud for Business?

AI fraud refers to cyberattacks in which malicious actors use artificial intelligence to automate attacks, scale them, or mimic human behavior in order to deceive enterprises. Unlike traditional scams that require manual effort, AI-generated attacks can analyze enterprise workflows, mimic internal communication styles, and exploit weaknesses across business systems.

Key AI technologies used in enterprise-targeted fraud include:

- Deepfake voice and video for executive impersonation

- Generative AI text for highly convincing phishing messages

- Synthetic identities that imitate real clients, partners, or vendors

- Automated scripts and AI agents that mimic employee or customer actions

For businesses, these attacks happen across finance, HR, supply chain, IT, and customer-facing systems.

Common Types of AI Fraud Targeting Businesses

2.1 Deepfake Executive Impersonation

Attackers use AI-generated voice or video to impersonate CEOs, CFOs, or key leaders. They request urgent payments, confidential documents, or authorization overrides. These attacks are especially dangerous because they mimic known authority figures.

2.2 AI-Generated Phishing & Social Engineering

Email, chat, and messaging attacks are now polished enough to pass a casual read, and they take forms such as:

- AI-generated emails tailored to job roles

- Fake supplier or partner requests

- Fraudulent instructions sent via Slack, Teams, or WhatsApp

Employees often cannot distinguish real messages from AI-generated ones.

2.3 Synthetic Identity and Supplier Fraud

AI tools create realistic but entirely fake:

- Vendor profiles

- Contractor accounts

- Customer onboarding requests

These synthetic identities infiltrate B2B systems and manipulate enterprise workflows.

2.4 Automated Credential Attacks

AI accelerates traditional credential abuse by:

- Testing leaked or guessed credentials at very high volume

- Mimicking human typing and timing patterns

- Exploiting enterprise SaaS platforms or legacy portals

Businesses with fragmented authentication systems become more vulnerable.

2.5 AI Manipulation of Enterprise Platforms

Attackers automate suspicious activities inside:

- Customer portals

- Partner systems

- Internal applications

These actions blend in with legitimate user behavior, making detection difficult.

Why Businesses Are Increasingly Vulnerable

AI fraud succeeds not because businesses are weak, but because the attack surface has grown dramatically. Key reasons include:

3.1 Distributed Teams and Multiple Access Points

Remote work and global teams rely on various communication channels—email, messaging apps, SaaS tools—creating more opportunities for impersonation and infiltration.

3.2 Expanding Digital Ecosystems

Enterprises integrate dozens of vendors, partners, and external systems. Attackers exploit these interconnected environments through synthetic identities or fake supplier requests.

3.3 Staff Cannot Easily Identify AI-Generated Content

Even trained staff struggle to detect AI-generated emails or deepfake voices. Attackers know this and target finance, HR, and customer service teams directly.

3.4 Legacy Defenses Are Not AI-Aware

Many businesses still rely on traditional fraud tools built to stop simple rule-based or signature-based threats—not intelligent, adaptive attacks.

3.5 The Cost of a Single Mistake Is High

For enterprises, one compromised identity or one fraudulent authorization request can cause:

- Financial transfers to attackers

- Data leakage or system compromise

- Compliance violations

- Long-term brand damage

How Businesses Can Detect AI Fraud Early

4.1 Look for Behavioral and Communication Anomalies

Even when AI-generated content looks right, subtle signals often reveal inconsistencies:

- Tone, urgency, or phrasing that does not match internal norms

- Messages requesting bypasses of standard workflows

- Unexpected communication timing
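The signals above can be sketched as simple checks over a message and its send time. This is a minimal illustration, not a production detector: the keyword lists, the working-hours window, and the function name `message_flags` are all assumptions made for the example, and a real deployment would compare each message against the sender's own history rather than fixed phrases.

```python
from datetime import datetime

# Hypothetical phrase lists; real systems would learn norms per sender and team.
URGENCY_TERMS = ("urgent", "immediately", "right away", "asap")
BYPASS_TERMS = ("skip approval", "bypass", "keep this confidential", "do not tell")


def message_flags(text, sent_at, work_start=8, work_end=19):
    """Return the list of anomaly flags raised by one message."""
    lowered = text.lower()
    flags = []
    if any(term in lowered for term in URGENCY_TERMS):
        flags.append("urgency")
    if any(term in lowered for term in BYPASS_TERMS):
        flags.append("workflow_bypass")
    # Assumed working hours in the recipient's local time.
    if not (work_start <= sent_at.hour < work_end):
        flags.append("unusual_timing")
    return flags


if __name__ == "__main__":
    suspicious = "This is urgent, please bypass the usual approval."
    print(message_flags(suspicious, datetime(2024, 1, 6, 2, 30)))
```

No single flag proves fraud. The flags are best treated as inputs to a broader risk score or as a prompt for manual verification.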

4.2 Deepfake Detection and Verification

Implementing audio/video integrity checks helps detect manipulated content. Protocols for verifying executive instructions through secondary channels are crucial.
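A secondary-channel protocol can be expressed as a small policy: confirm the instruction over a channel other than the one it arrived on, using contact details from an internal directory rather than from the message itself. The sketch below assumes a hypothetical `directory` mapping and invented field names (`claimed_sender`, `channel`); it shows the decision logic only, not an integration with any real messaging or telephony system.

```python
def pick_callback_channel(arrival_channel, directory_channels):
    """Return the first channel on file that differs from the arrival channel."""
    for channel in directory_channels:
        if channel != arrival_channel:
            return channel
    return None


def handle_instruction(instruction, directory):
    """Decide how to verify an instruction that claims to come from an executive.

    `instruction` is a dict with 'claimed_sender' and 'channel' keys.
    `directory` maps a sender to the channels held on file (hypothetical data).
    """
    channels = directory.get(instruction["claimed_sender"], [])
    callback = pick_callback_channel(instruction["channel"], channels)
    if callback is None:
        # No independent channel on file, so a person has to decide.
        return {"status": "escalate", "reason": "no independent channel on file"}
    return {"status": "hold_pending_confirmation", "confirm_via": callback}


if __name__ == "__main__":
    directory = {"cfo@example.com": ["email", "desk_phone"]}
    request = {"claimed_sender": "cfo@example.com", "channel": "email"}
    print(handle_instruction(request, directory))
```

The key design choice is that the callback details never come from the request itself, so an attacker who controls the original channel cannot also supply the confirmation route.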

4.3 Strengthening Email and Messaging Security

Enterprises should deploy AI that analyzes:

- Abnormal sender behaviors

- Suspicious patterns in communication

- Risk signals in vendor or partner interactions

4.4 Enterprise Identity Verification

Strong identity controls ensure that users—employees, suppliers, customers—are who they claim to be:

- MFA and SSO

- Device intelligence

- Adaptive authentication

- Access behavior analysis

How to Stop AI Scams for Business

To effectively stop AI fraud, businesses must adopt modern technical defenses capable of detecting AI-generated activity, authenticating real users, and securing enterprise systems. Below are the key technical measures companies should implement:

5.1 AI-Based Behavioral Analysis

Deploy behavioral analysis systems that evaluate user interaction patterns (mouse movement, keyboard behavior, navigation flow, timing, etc.). Because AI-driven scripts or bots — even advanced ones — often fail to fully emulate human-like behavioral nuances, this technique helps distinguish real users from automated attacks.

Behavioral analysis is especially useful in registration, login, payment authorization, supplier onboarding, and other high-risk workflows.
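As a rough illustration of one timing signal, the sketch below measures how regular the gaps between a user's input events are. Scripted input often arrives at near-constant intervals, while human input tends to vary. The function names and the `min_variation` threshold are assumptions chosen for the example. Production behavioral analysis combines many more signals, and a bot that adds random jitter would defeat this check on its own.

```python
from statistics import mean, pstdev


def interval_variation(timestamps_ms):
    """Coefficient of variation of the gaps between consecutive events.

    Returns None when there are fewer than three events, because a single
    gap says nothing about regularity.
    """
    gaps = [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]
    if len(gaps) < 2:
        return None
    average = mean(gaps)
    if average <= 0:
        return 0.0
    return pstdev(gaps) / average


def looks_scripted(timestamps_ms, min_variation=0.15):
    """Flag event streams whose timing is suspiciously uniform."""
    variation = interval_variation(timestamps_ms)
    return variation is not None and variation < min_variation


if __name__ == "__main__":
    print(looks_scripted([0, 100, 200, 300, 400]))  # evenly spaced events
    print(looks_scripted([0, 140, 390, 470, 820]))  # irregular events
```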

5.2 Device & Environment Intelligence

Implement device fingerprinting and environment detection to analyze device and browser attributes, the network environment, and other metadata. Flag anomalies such as headless browsers, emulators, and automation frameworks, which are common signs of bot or AI-driven fraud. Treat VPN or proxy usage as a weaker, supporting signal, since many legitimate users also rely on them.

This adds a robust layer beyond identity or credentials, validating the context of each access attempt.
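A minimal sketch of environment checks might look like the following. It assumes a client-side script has already collected a few attributes and sent them to the server. The `ClientEnvironment` fields, the signal names, and the weights are hypothetical. The two browser facts it relies on are that headless Chrome's default user agent contains `HeadlessChrome` and that automated browsers expose `navigator.webdriver` as true, both of which a determined attacker can spoof.

```python
from dataclasses import dataclass


@dataclass
class ClientEnvironment:
    user_agent: str
    webdriver: bool        # navigator.webdriver as reported by the client
    screen_width: int
    screen_height: int
    via_known_proxy: bool  # hypothetical result of an IP reputation lookup


# Illustrative weights only; proxy use is weighted low because it is common.
SIGNAL_WEIGHTS = {
    "headless_user_agent": 40,
    "webdriver_flag": 40,
    "no_screen": 20,
    "known_proxy": 10,
}


def environment_signals(env):
    """Return the names of the anomaly signals present in one environment."""
    signals = []
    if "HeadlessChrome" in env.user_agent:
        signals.append("headless_user_agent")
    if env.webdriver:
        signals.append("webdriver_flag")
    if env.screen_width == 0 or env.screen_height == 0:
        signals.append("no_screen")
    if env.via_known_proxy:
        signals.append("known_proxy")
    return signals


def environment_risk(env):
    """Sum the weights of the signals that fired, capped at 100."""
    return min(100, sum(SIGNAL_WEIGHTS[name] for name in environment_signals(env)))


if __name__ == "__main__":
    bot = ClientEnvironment("Mozilla/5.0 HeadlessChrome/120.0", True, 0, 0, False)
    print(environment_signals(bot), environment_risk(bot))
```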

5.3 Real-Time Risk Engine Combined with Business Rules

As AI fraud evolves, a static risk engine alone is insufficient. Enterprises must integrate their business logic and rules into the risk engine, so detection adapts with business workflows and policies.

- Risk evaluation should consider not only general behavior and device signals, but also business-specific context: e.g. unusual payment amounts, unexpected vendor changes, frequency of high-risk transactions, or cross-system authorization requests.

- The decision engine must be flexible and configurable: as business processes change (e.g. new approval flows, vendor onboarding rules, payment thresholds), security rules must be updated and immediately reflected in risk evaluation.

- This combination keeps risk detection aligned with real business operations, which helps reduce both false positives and false negatives and makes defenses more resilient to evolving fraud patterns.

By blending real-time behavioral/device risk signals with customizable business-rule triggers, enterprises can detect and block suspicious requests that deviate from expected business workflows — even if those requests come from seemingly legitimate accounts.
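One way to picture this combination is a small decision function that adds business-rule weights on top of a base score from behavioral and device signals. The sketch below is an assumption-laden illustration, not a description of any particular product: the `Rule` type, the event field names, the weights, and the thresholds are all invented for the example. Rules are kept as data so they can be replaced when approval flows or payment thresholds change.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    weight: int
    applies: Callable[[dict], bool]


# Hypothetical business rules; each reads fields from an event dict.
RULES = [
    Rule("large_payment", 30,
         lambda e: e.get("amount", 0) > 3 * e.get("vendor_avg_amount", float("inf"))),
    Rule("recent_bank_change", 40,
         lambda e: e.get("bank_changed_days_ago", float("inf")) < 7),
]


def evaluate(event, rules=RULES, review_at=40, block_at=70):
    """Combine a base behavioral/device score with business-rule weights."""
    fired = [rule for rule in rules if rule.applies(event)]
    score = min(100, event.get("base_risk", 0) + sum(rule.weight for rule in fired))
    if score >= block_at:
        decision = "block"
    elif score >= review_at:
        decision = "review"
    else:
        decision = "allow"
    return decision, score, [rule.name for rule in fired]


if __name__ == "__main__":
    payment = {"base_risk": 10, "amount": 50000,
               "vendor_avg_amount": 10000, "bank_changed_days_ago": 2}
    print(evaluate(payment))
```

Returning the names of the rules that fired alongside the decision gives reviewers an explanation they can act on, and supports the auditability that compliance teams typically ask for.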

5.4 Strong Identity & Access Controls

Use multi-factor authentication (MFA), single sign-on (SSO), role-based access control (RBAC), and privileged access management (PAM). Combine these with adaptive authentication, which requires additional verification when risk signals trigger, especially for high-risk actions like payments, permission changes, or data exports.

This reduces the damage potential even if credentials are compromised or account takeover is attempted.
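Adaptive authentication can be sketched as a threshold that tightens for sensitive actions. In the example below, the action names, the thresholds, and the function `required_verification` are illustrative assumptions. The risk score is taken as an input and would come from whatever behavioral, device, and business-rule signals the organization already computes.

```python
# Hypothetical set of actions that warrant a lower step-up threshold.
HIGH_RISK_ACTIONS = {"payment", "permission_change", "data_export"}


def required_verification(action, risk_score,
                          step_up_at=50, sensitive_step_up_at=20, deny_at=85):
    """Return 'allow', 'step_up' (ask for an extra factor), or 'deny'."""
    if risk_score >= deny_at:
        return "deny"
    threshold = sensitive_step_up_at if action in HIGH_RISK_ACTIONS else step_up_at
    return "step_up" if risk_score >= threshold else "allow"


if __name__ == "__main__":
    for action, score in [("view_dashboard", 30), ("payment", 30), ("payment", 90)]:
        print(action, score, required_verification(action, score))
```

With these example thresholds, the same moderate risk score lets a user view a dashboard but triggers an extra verification step before a payment.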

5.5 Secure All Entry Points and Critical Workflows

Ensure all access points (web portals, mobile apps, APIs, vendor portals) are protected. Apply device intelligence, behavioral analysis, adaptive verification, and a business-rule-based risk engine across registration, login, supplier onboarding, payment, and administrative actions.

This “security everywhere” approach ensures attackers cannot exploit less-protected entry points to infiltrate core enterprise systems.

The Future of AI Fraud Prevention for Business

AI-powered attacks will become more adaptive and sophisticated. In the near future, businesses must prepare for:

- Autonomous AI agents that operate across multiple channels (voice, video, chat, automation).

- Multi-channel impersonation combining deepfake media, synthetic identities, and automated scripts.

- Highly adaptive, multi-stage attack sequences targeting different business workflows.

- Regulatory and compliance demands for identity verification, provenance tracking, and auditability.

Only enterprises that build intelligent, AI-ready, business-aware defenses — combining behavioral analysis, device intelligence, content verification, and business-rule integration — will stay ahead of these threats.

How GeeTest Helps Businesses Stop AI Fraud

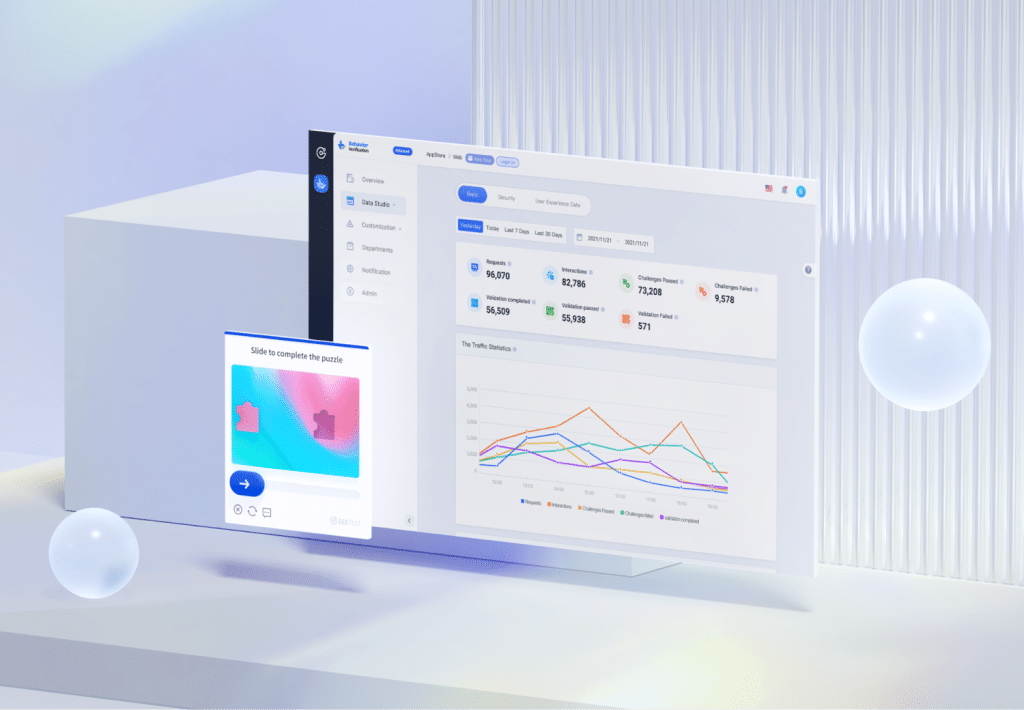

GeeTest provides enterprise-grade solutions that directly combat AI scams by combining behavioral analysis, device intelligence, and business-rule enforcement to protect critical business workflows.

Adaptive CAPTCHA & Behavior Verification

Keeps AI-driven attacks from compromising key workflows like login, registration, and payments.

- Detects AI-generated or automated bot activity

- Monitors mouse movements, touch gestures, typing patterns, and interaction rhythms

- Dynamically adjusts challenge difficulty according to risk level

- Secures high-value transactions, supplier onboarding, and sensitive approvals without affecting legitimate users

Business Rules Decision Engine (BRDE)

Aligns fraud detection with real business processes to catch context-sensitive threats.

- Embeds enterprise-specific business logic into risk evaluation

- Flags unusual payments, vendor changes, or abnormal account activity in real time

- Allows dynamic updates as business workflows evolve

- Reduces false positives while enforcing policy-driven security

Device Fingerprinting & Environment Intelligence

Adds an extra layer of defense by verifying device and environment integrity.

- Tracks device attributes, browser, and network environment

- Detects headless browsers, emulators, proxies, or automation frameworks

- Maps accounts to device histories to identify suspicious access

- Enhances protection beyond credentials and behavioral signals

Seamless Integration & Flexible Deployment

Provides flexible deployment options across all enterprise platforms.

- Supports web, mobile apps, and APIs

- Offers visible CAPTCHA, invisible verification, and backend API integration

- Customizable UX ensures minimal disruption to legitimate users

- Includes real-time monitoring and analytics dashboards for rapid threat response

Conclusion

AI fraud has evolved into a major threat for businesses, targeting critical workflows, customer accounts, and enterprise systems with AI-generated attacks. To effectively stop these threats, companies must adopt a combination of behavioral analysis, device intelligence, business-rule integration, and adaptive verification.

Solutions like GeeTest provide the necessary tools to detect AI-driven activity, enforce business-aware risk policies, verify devices and sessions, and secure key entry points without disrupting legitimate users.

Protect your business today with GeeTest and secure your key workflows against AI fraud.