Fraud does not always start with stolen passwords or compromised accounts. Often, the real threat begins when a new account is created. Fraudsters increasingly use artificial intelligence to generate fake identities at scale, creating accounts with realistic names, photos, and activity patterns that bypass traditional defenses. These AI-generated accounts are then used for spam, phishing, synthetic identity fraud, and large-scale scams across e-commerce, finance, and social media. The result is distorted business data, financial losses, and declining user trust.

Recent statistics illustrate the scale of the problem. Facebook removed over a billion fake accounts in a single quarter, while investigations in the financial sector uncovered millions of fabricated profiles that undermined compliance and customer records. Synthetic identity fraud, which blends real and fake personal information, is projected to cost the United States 23 billion dollars annually by 2030. For businesses, understanding how fake accounts are created and implementing measures to detect and prevent them is critical to maintaining security and trust.

What is Fake Account Creation?

Fake account creation refers to the deliberate establishment of online accounts using false, stolen, or fabricated personal information. These accounts are designed to appear legitimate and can range from simple profiles with altered names or birthdates to fully constructed identities complete with photos, backstories, and digital footprints. In many cases, attackers gradually build a history of activity or simulate normal user behavior to make these accounts less likely to be flagged by automated detection systems.

The motivation behind creating fake accounts is diverse. On e-commerce or subscription-based platforms, such accounts can be used to exploit promotions, manipulate sales metrics, or commit financial fraud. On social media, fake profiles may serve to amplify content, disseminate misleading information, or target specific users with harassment. The creation process often relies on automation, with bots and scripts capable of generating large volumes of accounts in a short time, making manual verification or detection extremely challenging.

These accounts can also be tailored to fit particular objectives. A fabricated seller persona might post fictitious products to scam buyers, while a gaming persona could manipulate rankings or accumulate virtual assets illicitly. The widespread availability of stolen personal data, combined with accessible tools and services that facilitate automation, has made it increasingly easy for malicious actors to flood platforms with fake identities.

Organizations face a delicate balance in defending against fake accounts. Tight registration verification can deter legitimate users and reduce conversion rates, while lax sign-up procedures can be exploited, leading to financial loss, reputational damage, and degraded user experience. Understanding the mechanisms and implications of fake account creation is therefore essential for businesses seeking to protect both their platforms and their users.

How Are Fake Accounts Created?

Creating fake accounts is often a systematic process rather than a single action. Many digital platforms request minimal information during registration, usually including a name, email, and phone number, to simplify user onboarding. While convenient for legitimate users, this approach also makes it easy for attackers to register large numbers of fraudulent accounts.

Attackers frequently use stolen consumer data obtained from breaches or acquire information through illicit sources. This data, combined with fabricated or partially synthetic identity details, allows the creation of accounts that appear legitimate. To scale these operations, fraudsters employ automated tools and bot frameworks that simulate human behavior, including adding delays between actions and navigating site flows in realistic ways. Some also utilize specialized services that provide CAPTCHA solvers, proxy servers, disposable emails, or virtual phone numbers, enabling faster and less detectable account creation at scale.

The process usually involves three key stages:

- Data collection: Attackers gather information from stolen records, fabricated identities, or a combination of both. This data can include names, birthdates, email addresses, phone numbers, and other personal identifiers.

- Automation setup: Using scripts or bot frameworks, attackers automate registration, including solving verification challenges and managing disposable credentials. Automation allows thousands of accounts to be created in a short time while imitating real user behavior to avoid detection.

- Account creation and deployment: Fraudsters complete the registration process using synthetic identities or repurposed credentials. Some sell or rent these accounts, while others use them to exploit platform services, manipulate engagement metrics, or support other fraudulent activities.

Scaling these campaigns often involves the use of temporary email services, email aliasing features, or virtual phone numbers for verification purposes. Bots may rotate IP addresses, randomize headers, and throttle requests to mimic human activity. Once accounts are created, they can be used to exploit free services, inflate engagement metrics, or participate in coordinated malicious campaigns.

By combining minimal registration requirements, stolen or synthetic data, and automated tools, attackers can generate fake accounts efficiently at scale, creating ongoing challenges for platform security and user trust.

How Do Criminals Exploit Fake Accounts?

Fraudulent accounts are rarely created without purpose. Once established, they are deployed across industries and platforms to fuel a wide range of malicious activities:

- Advertising manipulation: Attackers use fake profiles to generate artificial clicks and impressions, misleading advertisers about campaign performance and wasting marketing spend. Inflated engagement metrics also make it difficult to distinguish genuine audience behavior.

- Social engineering and infiltration: Fraudulent accounts can impersonate legitimate users or organizations, sending connection requests, messages, or reset prompts that pave the way for identity theft or phishing attacks.

- Testing stolen credentials: Large sets of username and password pairs obtained from breaches are validated through waves of fake accounts. This method enables criminals to exploit password reuse across different platforms.

- Influence operations: Coordinated networks of fake accounts amplify divisive narratives, spread misleading information, or simulate grassroots support for specific agendas, creating the illusion of legitimacy and reach.

- Promotional and trial abuse: Free trials, bonus offers, or loyalty rewards are repeatedly exploited by registering multiple fake profiles. This allows fraudsters to access premium services without cost while inflating participation numbers.

- Market distortion through reviews: Fraudulent accounts post fabricated ratings and testimonials to boost certain products or damage competitors. These manipulative tactics undermine consumer trust and distort fair competition.

- Malware and phishing delivery: Some accounts appear benign until activated to spread harmful links, malicious attachments, or phishing messages. The apparent authenticity of these accounts increases the success rate of attacks.

- Financial crimes: Fake accounts are also instrumental in obfuscating illicit transactions. Criminals route funds through layers of fabricated identities to mask origins and complicate regulatory oversight.

- Community manipulation: In online forums or social platforms, fabricated personas post repetitive or coordinated comments to push narratives, influence discussions, or create false consensus.

- Subscription exploitation: Fraudsters use stolen or fabricated payment data to acquire paid subscriptions, later reselling access at discounted prices through underground markets.

What’s the Impact of Fake Account Creation on Business?

The impact of fake account creation extends far beyond simple numbers. For businesses, these accounts erode trust, inflate costs, and expose organizations to legal and reputational risks.

Reputational Damage

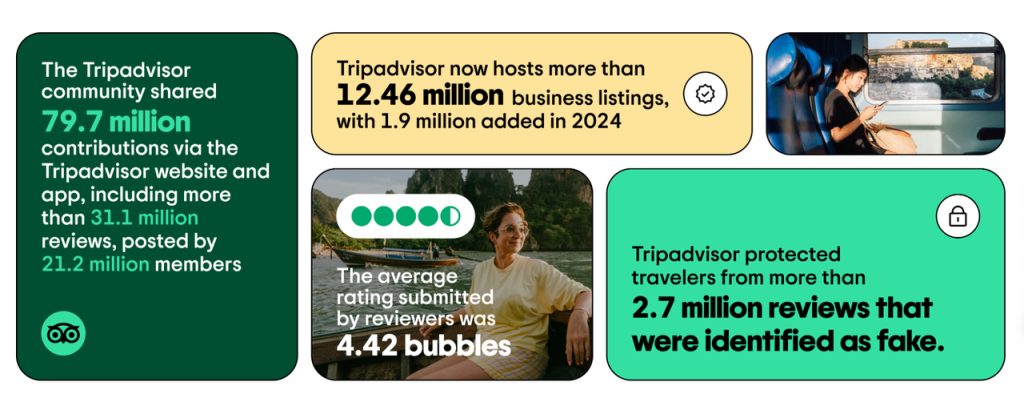

Fake reviews can significantly distort consumer perception. In 2024, Tripadvisor reported removing more than 2.7 million fraudulent reviews, many of which were linked to paid review campaigns. Such manipulation not only undermines customer trust but also damages the credibility of legitimate businesses.

Financial Losses

Advertising fraud driven by fake accounts drains marketing budgets on a global scale. Spider Labs’ 2025 Ad Fraud Report estimated that digital ad fraud caused businesses to lose more than 37.7 billion USD in 2024 alone.

Security Threats

Fake accounts are often used in phishing, credential stuffing, and large-scale scams. In late 2024, Meta removed over 2 million fake accounts connected to scam operations in South and Southeast Asia, many of which were linked to investment fraud and “pig-butchering” schemes (Forbes). In 2025, Meta further reported removing about 6.8 million fraudulent WhatsApp accounts used by organized scam centers.

Market Manipulation and Misinformation

Networks of fake accounts amplify false narratives or create artificial consensus. This can influence consumer sentiment, sway political opinion, or destabilize public discourse, making platforms less reliable sources of information.

Fake accounts therefore do not just distort analytics; they create systemic risks that can damage businesses financially, operationally, and reputationally.

How Can Businesses Detect Fake Accounts?

Fake account creation can often bypass basic validation. Attackers may provide legitimate-looking emails, solve CAPTCHAs, and simulate typical browser behavior. When these attempts are performed at scale, however, subtle patterns appear that distinguish them from genuine users. Detecting fake accounts effectively requires analyzing volume, environment, and behavior.

Monitoring volume anomalies

Automated attacks frequently generate large spikes in registrations, especially during unusual hours or promotional events. Sudden surges in new signups can indicate mass account creation. Other signs include low engagement after registration or clusters of accounts created with the same referral codes or promotional links.
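One simple way to surface such surges is to compare each hour's signup count with a trailing baseline. The sketch below assumes hourly counts are already aggregated; the function name `find_signup_spikes`, the 24-hour window, and the threshold of three standard deviations are illustrative choices, not recommended values.

```python
from statistics import mean, stdev


def find_signup_spikes(hourly_counts, window=24, threshold=3.0):
    """Return indices of hours whose count is far above the trailing baseline.

    hourly_counts: list of signup counts, one per hour, oldest first.
    window: number of preceding hours used as the baseline (assumed value).
    threshold: how many standard deviations above the mean counts as a spike.
    """
    spikes = []
    for i in range(window, len(hourly_counts)):
        baseline = hourly_counts[i - window:i]
        mu = mean(baseline)
        # Floor the deviation at 1.0 so a perfectly flat baseline
        # does not turn every small change into a spike.
        sigma = max(stdev(baseline), 1.0)
        if (hourly_counts[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Usage: a steady baseline followed by one abnormal hour.
counts = [10, 12, 11, 9] * 6 + [11, 250, 10]
print(find_signup_spikes(counts))
```

A fixed trailing window is easy to reason about but ignores weekly seasonality and planned promotions, so in practice a flagged hour is a prompt for closer inspection rather than proof of an attack.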

Identifying environmental inconsistencies

Automation often leaves digital traces. Multiple accounts originating from the same IP ranges, repeated device fingerprints, or similar browser configurations can indicate bot activity. Disposable email addresses, Gmail alias manipulation, and virtual phone numbers are also commonly used in coordinated fake account attempts.
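Gmail alias manipulation can be spotted by reducing each address to a canonical form before comparing registrations. The sketch below relies on two Gmail behaviors: dots in the local part are ignored, and anything after a `+` is treated as a tag. The helper names `canonical_email` and `group_by_canonical` are hypothetical, and other providers follow different aliasing rules that this example does not attempt to cover.

```python
from collections import defaultdict

# Domains that deliver to the same Gmail mailbox.
GMAIL_DOMAINS = {"gmail.com", "googlemail.com"}


def canonical_email(address):
    """Collapse Gmail dot and plus aliases into a single canonical address."""
    local, _, domain = address.strip().lower().rpartition("@")
    if domain in GMAIL_DOMAINS:
        # Drop the "+tag" suffix and remove dots, which Gmail ignores.
        local = local.split("+", 1)[0].replace(".", "")
        domain = "gmail.com"
    return f"{local}@{domain}"


def group_by_canonical(addresses):
    """Return canonical addresses that were registered under several aliases."""
    groups = defaultdict(list)
    for address in addresses:
        groups[canonical_email(address)].append(address)
    return {key: found for key, found in groups.items() if len(found) > 1}


# Usage: three signups that all reach the same mailbox.
signups = [
    "john.doe@gmail.com",
    "johndoe+promo1@gmail.com",
    "j.o.h.n.doe+promo2@googlemail.com",
    "jane@example.com",
]
print(group_by_canonical(signups))
```

Legitimate users also use plus tags, so a shared canonical address is better treated as one signal among several than as grounds for blocking on its own.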

Analyzing behavioral irregularities

Bots struggle to mimic natural human behavior across an onboarding process. Suspicious signs include skipping profile setup, entering repetitive or random data, and immediately attempting to exploit referral programs or promotions. Behavioral patterns such as typing cadence, navigation sequences, and post-registration activity can reveal inconsistencies.

Account details verification

Cross-referencing registration information with third-party sources can help identify suspicious accounts. Services that validate email addresses, phone numbers, or identity documents make it easier to distinguish between real and fabricated users.

Transaction and device correlation

Assessing device characteristics, network attributes, and geographic data in combination with account information can highlight anomalies. Multiple accounts created from the same device or IP cluster, even with proxy rotation, are often the result of automated attacks.
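The correlation idea can be illustrated by grouping signups on a device identifier rather than on the IP address, since proxies change the latter but not the former. This is a rough sketch: the record fields `account_id`, `device_id`, and `ip`, the function name `shared_device_clusters`, and the cutoff of three accounts are all assumptions, and how a stable device fingerprint is computed is out of scope here.

```python
from collections import defaultdict


def shared_device_clusters(signups, min_accounts=3):
    """Return devices that registered at least min_accounts distinct accounts.

    signups: iterable of dicts with "account_id", "device_id", and "ip" keys
    (hypothetical field names for this example).
    """
    accounts_by_device = defaultdict(set)
    for signup in signups:
        accounts_by_device[signup["device_id"]].add(signup["account_id"])
    return {
        device: sorted(accounts)
        for device, accounts in accounts_by_device.items()
        if len(accounts) >= min_accounts
    }


# Usage: one device rotates through proxies but keeps the same fingerprint.
events = [
    {"account_id": "a1", "device_id": "d1", "ip": "198.51.100.7"},
    {"account_id": "a2", "device_id": "d1", "ip": "203.0.113.44"},
    {"account_id": "a3", "device_id": "d1", "ip": "192.0.2.19"},
    {"account_id": "a4", "device_id": "d2", "ip": "192.0.2.20"},
]
print(shared_device_clusters(events))
```

Shared devices also occur legitimately, for example in households or on public computers, so the cutoff should be tuned against real traffic.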

Advanced bot detection

As artificial intelligence enables the creation of increasingly realistic synthetic identities, distinguishing between human and bot behavior becomes more challenging. Machine learning models that analyze device traits, behavioral signals, and patterns in the registration flow are essential to detect sophisticated attacks.

Detection of fake accounts is important for protecting both users and businesses. However, reactive measures alone are not sufficient. Suspicious accounts may have already caused harm before they are flagged. Combining detection with proactive prevention measures is critical to stop malicious actors during the registration process.

Strategies to Prevent Fake Account Creation

Stopping fake accounts before they enter the system is the most effective defense. Businesses can adopt a combination of verification, technical barriers, and intelligent monitoring to protect their platforms while keeping the experience smooth for real users.

Key strategies include:

- Behavioral monitoring: Track unusual spikes in registrations or repeated failed attempts. Automated analysis of user journeys can flag suspicious activity early.

- Honeypot fields: Place invisible fields in registration forms. Legitimate users will not interact with them, but bots often do, making detection seamless.

- User verification: Validate accounts with email or phone confirmation. One-time passwords or magic links ensure that contact details are genuine.

- Account creation limits: Restrict the number of registrations from the same IP address, device, or email domain within a given timeframe to prevent mass sign-ups.

- Multi-factor authentication (MFA): Add an extra verification step during onboarding, such as SMS or app-based codes, to block automated sign-ups.

- Advanced bot detection: Use adaptive solutions that analyze device, network, and behavioral signals. Unlike basic tools, modern detection systems can identify sophisticated bots without adding friction for real users.
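Two of the strategies above, honeypot fields and account creation limits, are simple enough to sketch in a few lines. The example below is an illustration under stated assumptions, not a production implementation: the class name `SignupGuard`, the hidden field name `website`, and the default limit of three signups per hour are hypothetical, and state is kept in memory for a single process only.

```python
import time
from collections import defaultdict, deque


class SignupGuard:
    """Reject signups that fill a honeypot field or exceed a per-client limit."""

    def __init__(self, max_signups=3, window_seconds=3600):
        self.max_signups = max_signups
        self.window_seconds = window_seconds
        # Maps a client key (IP, device ID, ...) to recent signup timestamps.
        self._attempts = defaultdict(deque)

    def check(self, form, client_key, now=None):
        """Return "allow" or a rejection reason for one registration attempt."""
        now = time.time() if now is None else now

        # Honeypot: "website" is hidden from real users via CSS,
        # so any value here was most likely entered by a script.
        if (form.get("website") or "").strip():
            return "reject: honeypot filled"

        # Sliding window: forget attempts older than the window.
        attempts = self._attempts[client_key]
        while attempts and now - attempts[0] >= self.window_seconds:
            attempts.popleft()
        if len(attempts) >= self.max_signups:
            return "reject: rate limit"

        attempts.append(now)
        return "allow"


# Usage: two signups per minute allowed from the same client key.
guard = SignupGuard(max_signups=2, window_seconds=60)
print(guard.check({"email": "a@example.com"}, "203.0.113.5", now=0))
print(guard.check({"email": "b@example.com"}, "203.0.113.5", now=10))
print(guard.check({"email": "c@example.com"}, "203.0.113.5", now=20))
print(guard.check({"email": "d@example.com", "website": "http://spam"}, "198.51.100.9", now=20))
```

Keying the limit on IP address alone is easy to evade with proxies, so the client key could instead combine device and network signals. A deployment running several servers would also need shared storage for the counters.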

How GeeTest Stops Fake Account Creation

GeeTest provides a multilayered solution that stops fraudulent registrations at the source while preserving a seamless experience for legitimate users.

Adaptive behavioral analysis

GeeTest analyzes real-time behavioral signals generated during the signup process, including mouse movements, touch gestures, and interaction patterns. This makes it possible to distinguish humans from bots with high accuracy.

Dynamic challenge system

Unlike static CAPTCHAs that can be solved cheaply through farms or automation, GeeTest challenges evolve dynamically based on the risk level of each request. Suspicious users encounter stronger challenges, while trusted users enjoy frictionless login and signup.

Bot mitigation at scale

GeeTest detects and blocks automated tools that generate thousands of accounts in minutes. By identifying anomalies in device fingerprints, IP reputation, and interaction flows, it prevents large-scale fake registrations from ever completing.

Low-friction user experience

Security measures often frustrate legitimate users. GeeTest is designed to minimize disruption by only escalating challenges when abnormal behavior is detected, keeping genuine onboarding smooth and secure.

Proven global reliability

Trusted by over 360,000 websites and apps across industries such as fintech, ecommerce, and gaming, GeeTest stops fake accounts before they cause financial losses, distort KPIs, or damage customer trust.